OpenStack Cloud Computing Cookbook

http://www.openstackcookbook.com/


May the fourth be with you!

Posted on January 29, 2018

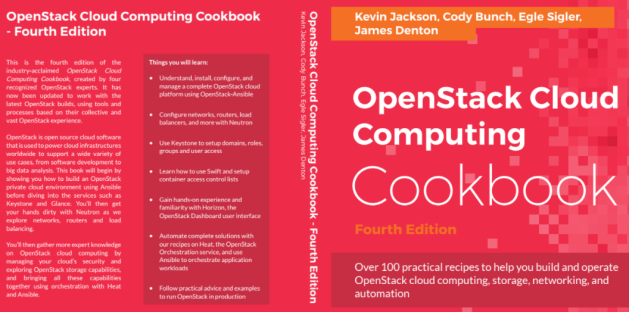

It is with immense pleasure that we bring to you the 4th Edition of the OpenStack Cloud Computing Cookbook by Packt Publishing!

Brought to you by 4 talented authors from Rackspace: Kevin Jackson, Cody Bunch, Egle Sigler and James Denton; a host of tenacious technical reviewers: Christian Ashby, Stefano Canepa, Ricky Donato, Geoff Higginbottom, Andy McCrae, and Wojciech Sciesinski; and a Packt team keeping us in check!

We have listened to your feedback and attempted to bend as many rules as we can to bring you a book that will help you understand, deploy and operate an OpenStack cloud environment.

When the 1st Edition was written in 2012, I didn’t think I’d still be updating it today to make a 4th Edition! Thank you to everyone involved. To the clever people that helped bring this to fruition, I thank you. To our readers – please enjoy!

UK Amazon: http://amzn.to/2mStv8e

Packt: https://www.packtpub.com/virtualization-and-cloud/openstack-cloud-computing-cookbook-fourth-edition

Installing and Configuring OpenLDAP

Posted on August 17, 2015

To operate the OpenStack Identity service with an external authentication source, you need an external authentication service to be available. In the OpenStack Cloud Computing Cookbook, we used OpenLDAP. As installing and configuring OpenLDAP is beyond the scope of the book, that information is provided here.

Getting ready

We will be performing an installation and configuration of OpenLDAP on its own Ubuntu 14.04 server.

How to do it…

We will break this into two steps: installing OpenLDAP, and configuring it for use with OpenStack.

Installing OpenLDAP

Once you are logged in to your Ubuntu 14.04 node, run the following commands to install OpenLDAP:

We set the Debian package configuration frontend (debconf) to non-interactive, as we will provide the configuration values for OpenLDAP prior to installation:

export DEBIAN_FRONTEND=noninteractive

Next, we preseed the admin password so that OpenLDAP installs without prompting:

echo -e " \
slapd slapd/internal/generated_adminpw password openstack
slapd slapd/password2 password openstack
slapd slapd/internal/adminpw password openstack
slapd slapd/password1 password openstack
" | sudo debconf-set-selections
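If you would rather not repeat the password four times, the same preseed answers can be generated from a single variable. This is a minimal sketch that assumes a hypothetical LDAP_ADMIN_PASS variable (the step above hard-codes openstack). It only prints the answers, and you pipe them into debconf-set-selections yourself:

```shell
#!/usr/bin/env bash
# Sketch: generate the four slapd preseed answers from one variable.
# LDAP_ADMIN_PASS is a hypothetical name; the recipe uses the literal 'openstack'.
LDAP_ADMIN_PASS="${LDAP_ADMIN_PASS:-openstack}"

slapd_preseed() {
  local q
  for q in internal/generated_adminpw password2 internal/adminpw password1; do
    printf 'slapd slapd/%s password %s\n' "$q" "$LDAP_ADMIN_PASS"
  done
}

# Review the answers first; to apply them:
#   slapd_preseed | sudo debconf-set-selections
slapd_preseed
```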

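Finally, we install OpenLDAP via the slapd package. Because sudo normally resets the environment, we pass DEBIAN_FRONTEND on the sudo command line so that apt-get sees it:

sudo DEBIAN_FRONTEND=noninteractive apt-get install -y slapd ldap-utils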

Configuring

OpenStack has a few requirements regarding which attribute types are used for user information. To accommodate these in OpenLDAP, we add the following definitions to the new-attributes schema file:

echo "
attributetype ( 1.2.840.113556.1.4.8 NAME 'userAccountControl' SYNTAX '1.3.6.1.4.1.1466.115.121.1.27' )

objectclass ( 1.2.840.113556.1.5.9 NAME 'user' DESC 'a user' SUP inetOrgPerson STRUCTURAL MUST ( cn ) MAY ( userPassword $ memberOf $ userAccountControl ) )
" | sudo tee -a /etc/ldap/schema/new-attributes.schema
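Before restarting, you may want to confirm that both definitions actually landed in the schema file. The helper below is a hypothetical sketch that only greps for the two NAME clauses. It does not validate the schema syntax or check that slapd has loaded it:

```shell
#!/usr/bin/env bash
# Sketch: check that the schema file holds both definitions added above.
# schema_has_user_defs is hypothetical; pass another path to test a copy.
schema_has_user_defs() {
  local file="${1:-/etc/ldap/schema/new-attributes.schema}"
  grep -q "NAME 'userAccountControl'" "$file" 2>/dev/null &&
    grep -q "NAME 'user' DESC" "$file" 2>/dev/null
}

if schema_has_user_defs; then
  echo "schema definitions present"
else
  echo "schema definitions missing" >&2
fi
```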

Finally, restart OpenLDAP:

sudo service slapd restart

How it works…

What we have done here is install OpenLDAP on Ubuntu 14.04. Additionally, we created an LDAP schema file that defines the userAccountControl attribute and a ‘user’ object class used to provide login authorization.

Pre-Requisites for the OpenStack Cloud Computing Cookbook lab

Posted on June 9, 2015

The OpenStack Cloud Computing Cookbook has been written so that readers can follow each section to understand, install and configure each component of the OpenStack environment. We cover Compute (Nova), Identity (Keystone), Image (Glance), Networking (Neutron) and Storage (Cinder and Swift), as well as other topics such as installing these components using Ansible. As such, some elements of the OpenStack environment don’t fit in any particular chapter. These supporting services are:

- Messaging (RabbitMQ)

- Database (MariaDB)

- NTP (required on each host in the environment; for details of configuring NTP, visit https://help.ubuntu.com/lts/serverguide/NTP.html)

OpenStack clients installation on Ubuntu for the OpenStack Cloud Computing Cookbook

Posted on March 28, 2015

Throughout the OpenStack Cloud Computing Cookbook we expect the reader to have access to the client tools required to operate an OpenStack environment. If these are not installed, they can be installed by following this simple guide.

This guide covers installation of the following:

- Nova Client

- Keystone Client

- Neutron Client

- Glance Client

- Cinder Client

- Swift Client

- Heat Client

Getting ready

To use the tools and this guide, you are expected to have access to an Ubuntu (preferably 14.04 LTS) server or PC that has access to the network where you are installing OpenStack.

How to do it…

To install the clients, simply execute the following commands:

sudo apt-get update

sudo apt-get install python-novaclient python-keystoneclient python-neutronclient \
  python-glanceclient python-cinderclient python-swiftclient python-heatclient
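To confirm the installation worked, you can check that each client's command is on the PATH. The sketch below assumes that the packages above install commands named nova, keystone, neutron, glance, cinder, swift and heat. The helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: list any OpenStack CLI commands from this guide that are not on the PATH.
# missing_clients is a hypothetical helper; command names are assumed to match
# the python-*client packages installed above.
missing_clients() {
  local cmd
  for cmd in nova keystone neutron glance cinder swift heat; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

missing=$(missing_clients)
if [ -z "$missing" ]; then
  echo "All OpenStack clients found."
else
  echo "Missing clients:" $missing
fi
```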

Once these are installed, we can configure our CLI shell environment with the appropriate environment variables to allow us to communicate with the OpenStack endpoints.

A typical set of environment variables, used extensively throughout the book when operating OpenStack as a user of the services, is as follows:

export OS_TENANT_NAME=cookbook
export OS_USERNAME=admin
export OS_PASSWORD=openstack
export OS_AUTH_URL=https://192.168.100.200:5000/v2.0/
export OS_NO_CACHE=1
export OS_KEY=/vagrant/cakey.pem
export OS_CACERT=/vagrant/ca.pem

Typically these export lines are written to a file, for example $HOME/openrc, so that a user can load them into the current shell with the following command before using OpenStack:

source openrc

(or, in any POSIX shell: . openrc)
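As a concrete illustration, the following sketch writes the variables above into an openrc file and loads it. It assumes a hypothetical OPENRC override variable, with the default path $HOME/openrc. The file is made readable only by its owner because it holds a plain-text password:

```shell
#!/usr/bin/env bash
# Sketch: write the book's example credentials to an openrc file and load them.
# OPENRC is a hypothetical override; by default the file goes to $HOME/openrc.
OPENRC="${OPENRC:-$HOME/openrc}"

# The quoted 'EOF' stops the shell expanding anything while the file is written.
cat > "$OPENRC" <<'EOF'
export OS_TENANT_NAME=cookbook
export OS_USERNAME=admin
export OS_PASSWORD=openstack
export OS_AUTH_URL=https://192.168.100.200:5000/v2.0/
export OS_NO_CACHE=1
export OS_KEY=/vagrant/cakey.pem
export OS_CACERT=/vagrant/ca.pem
EOF

# Restrict permissions: the file contains a plain-text password.
chmod 600 "$OPENRC"

# Load the variables into the current shell ('.' is the POSIX form of 'source').
. "$OPENRC"

# Show which OpenStack variables are now set, leaving out the password.
env | grep '^OS_' | grep -v '^OS_PASSWORD' | sort
```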

Configuring Keystone for the first time

To initially configure Keystone, we use the SERVICE_TOKEN and SERVICE_ENDPOINT environment variables. The SERVICE_TOKEN value is the admin_token set in /etc/keystone/keystone.conf and should only be used for bootstrapping Keystone. Set the environment up as follows:

export ENDPOINT=192.168.100.200

export SERVICE_TOKEN=ADMIN

export SERVICE_ENDPOINT=https://${ENDPOINT}:35357/v2.0

export OS_KEY=/vagrant/cakey.pem

export OS_CACERT=/vagrant/ca.pem

This bypasses the usual authentication process to allow services and users to be configured in Keystone before the users and passwords exist.
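Rather than typing the token by hand, you can read it from keystone.conf. The helper below is a hypothetical sketch. It takes the first uncommented admin_token line and, as a simplification, does not check which INI section that line belongs to:

```shell
#!/usr/bin/env bash
# Sketch: read the bootstrap token from keystone.conf instead of typing it.
# admin_token_from_conf is hypothetical. It prints the value of the first
# uncommented 'admin_token = ...' line and ignores INI section headers.
admin_token_from_conf() {
  awk '/^[ \t]*admin_token[ \t]*=/ {
         sub(/^[ \t]*admin_token[ \t]*=[ \t]*/, "")
         sub(/[ \t]+$/, "")
         print
         exit
       }' "${1:-/etc/keystone/keystone.conf}"
}

# Only export when the file is readable, e.g. on the controller node.
if [ -r /etc/keystone/keystone.conf ]; then
  export SERVICE_TOKEN="$(admin_token_from_conf)"
fi
```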

How it works…

The OpenStack command line tools utilize environment variables to know how to interact with OpenStack. The environment variables are easy to understand in terms of their function. A user is able to control multiple environments by simply changing the relevant environment variables.

To initially install the users and services, a SERVICE_TOKEN must be used, because at this first stage there are no users in the Keystone database to assign administrative privileges to. Once the initial users and services have been set up, the SERVICE_TOKEN should not be used unless maintenance or troubleshooting calls for it.

Installing MariaDB for OpenStack Cloud Computing Cookbook

Posted on March 28, 2015

The examples in the OpenStack Cloud Computing Cookbook assume you have a suitable database backend configured to run the OpenStack services. This didn’t fit into any single chapter, because all of the services rely on a database such as MariaDB or MySQL. If you don’t have one installed, follow these steps, which you should be able to copy and paste to run in your environment.

Getting ready

We will be performing an installation and configuration of MariaDB on the Controller node that is shown in the diagram. MariaDB and MySQL are interchangeable in terms of providing the MySQL database connections required for OpenStack. More information can be found at the MariaDB website. Throughout the book, the Controller that hosts MariaDB, and that the services connect to, has the IP address 172.16.0.200.

OpenStack Cloud Computing Cookbook Lab Environment

How to do it…

To install MariaDB, carry out the following steps as root

Tip: A script is provided here for you to run the commands below

- We first set some variables that will be used in the subsequent steps. This allows you to edit to suit your own environment.

export MYSQL_HOST=172.16.0.200
export MYSQL_ROOT_PASS=openstack
export MYSQL_DB_PASS=openstack

- We then set some defaults in debconf to avoid any interactive prompts

echo "mysql-server-5.5 mysql-server/root_password password $MYSQL_ROOT_PASS" | sudo debconf-set-selections
echo "mysql-server-5.5 mysql-server/root_password_again password $MYSQL_ROOT_PASS" | sudo debconf-set-selections
echo "mysql-server-5.5 mysql-server/root_password seen true" | sudo debconf-set-selections
echo "mysql-server-5.5 mysql-server/root_password_again seen true" | sudo debconf-set-selections

- We then install the required packages with the following command

sudo apt-get -y install mariadb-server python-mysqldb

- We now tell MariaDB to listen on all interfaces as well as set a max connection limit. Note, edit to suit the security and requirements in your environment.

sudo sed -i "s/^bind\-address.*/bind-address = 0.0.0.0/g" /etc/mysql/my.cnf
sudo sed -i "s/^#max_connections.*/max_connections = 512/g" /etc/mysql/my.cnf
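Because sed -i edits the file in place, it can be worth previewing the result first. The sketch below applies the same two substitutions without -i and prints only the affected lines. The helper name is hypothetical, and it reads /etc/mysql/my.cnf unless you give it another path:

```shell
#!/usr/bin/env bash
# Sketch: preview the two my.cnf edits without touching the real file.
# preview_mycnf_edits is hypothetical; it runs the same substitutions as the
# step above (without -i) and prints only the lines they affect.
preview_mycnf_edits() {
  sed -e 's/^bind-address.*/bind-address = 0.0.0.0/' \
      -e 's/^#max_connections.*/max_connections = 512/' \
      "${1:-/etc/mysql/my.cnf}" | grep -E '^(bind-address|max_connections)'
}

if [ -r /etc/mysql/my.cnf ]; then
  preview_mycnf_edits
fi
```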

- To speed up MariaDB as well as help with permissions, add the following line to /etc/mysql/conf.d/skip-name-resolve.cnf

echo "[mysqld]
skip-name-resolve" > /etc/mysql/conf.d/skip-name-resolve.cnf

- We configure UTF-8 with the following

echo "[mysqld]
collation-server = utf8_general_ci
init-connect='SET NAMES utf8'
character-set-server = utf8" > /etc/mysql/conf.d/01-utf8.cnf
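Multi-line echo strings are easy to break when copying and pasting. Here-documents avoid that problem and put each option on its own line. This is a minimal sketch with a hypothetical write_mysql_confd function. The target directory defaults to /etc/mysql/conf.d, which needs root:

```shell
#!/usr/bin/env bash
# Sketch: write both conf.d snippets with here-documents.
# write_mysql_confd is hypothetical; the directory defaults to
# /etc/mysql/conf.d (run as root for that path).
write_mysql_confd() {
  local dir="${1:-/etc/mysql/conf.d}"

  cat > "${dir}/skip-name-resolve.cnf" <<'EOF'
[mysqld]
skip-name-resolve
EOF

  cat > "${dir}/01-utf8.cnf" <<'EOF'
[mysqld]
collation-server = utf8_general_ci
init-connect='SET NAMES utf8'
character-set-server = utf8
EOF
}

# Usage: write_mysql_confd              (as root, real path)
#        write_mysql_confd /tmp/scratch (try it in a scratch directory)
```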

- We pick up the changes made by restarting MariaDB with the following command

sudo service mysql restart

- We now ensure the root user has the correct permissions to allow us to create further databases and users

mysql -u root -p${MYSQL_ROOT_PASS} -h localhost -e "GRANT ALL ON *.* to root@\"localhost\" IDENTIFIED BY \"${MYSQL_ROOT_PASS}\" WITH GRANT OPTION;"
mysql -u root -p${MYSQL_ROOT_PASS} -h localhost -e "GRANT ALL ON *.* to root@\"${MYSQL_HOST}\" IDENTIFIED BY \"${MYSQL_ROOT_PASS}\" WITH GRANT OPTION;"
mysql -u root -p${MYSQL_ROOT_PASS} -h localhost -e "GRANT ALL ON *.* to root@\"%\" IDENTIFIED BY \"${MYSQL_ROOT_PASS}\" WITH GRANT OPTION;"

- We run the following command to pick up the permission changes

mysqladmin -uroot -p${MYSQL_ROOT_PASS} flush-privileges
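The three GRANT statements differ only in the host part, so they can also be generated in a loop and sent in a single mysql session. This sketch uses a hypothetical grant_sql helper. It falls back to the recipe's example values if the variables are not already set:

```shell
#!/usr/bin/env bash
# Sketch: generate the three GRANT statements from one host list.
# grant_sql is hypothetical; the defaults mirror the recipe's example values.
MYSQL_HOST="${MYSQL_HOST:-172.16.0.200}"
MYSQL_ROOT_PASS="${MYSQL_ROOT_PASS:-openstack}"

grant_sql() {
  local host
  for host in localhost "${MYSQL_HOST}" '%'; do
    printf "GRANT ALL ON *.* TO root@'%s' IDENTIFIED BY '%s' WITH GRANT OPTION;\n" \
      "$host" "$MYSQL_ROOT_PASS"
  done
}

# Review the SQL, then feed it to the server in one session, e.g.:
#   grant_sql | mysql -u root -p"${MYSQL_ROOT_PASS}" -h localhost
grant_sql
```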

How it works…

What we have done here is install and configure MariaDB on our Controller node, which has the address 172.16.0.200. When we configure OpenStack services that require a database connection, they will use a connection string of the form mysql://user:password@172.16.0.200/service.
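For illustration, the connection string for a service can be assembled from its database user, password and database name. The db_url helper and the keystone/openstack arguments below are placeholders, not values taken from the book's service chapters:

```shell
#!/usr/bin/env bash
# Sketch: assemble a service's database connection string.
# db_url is hypothetical, and the arguments used below are placeholders.
MYSQL_HOST="${MYSQL_HOST:-172.16.0.200}"

db_url() {
  # $1 = database user, $2 = password, $3 = database name
  printf 'mysql://%s:%s@%s/%s\n' "$1" "$2" "$MYSQL_HOST" "$3"
}

db_url keystone openstack keystone
```

This builds the string by plain concatenation. A password containing characters such as @, : or / would need to be percent-encoded before it is placed in the URL.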

See Also

The 3rd Edition of the OpenStack Cloud Computing Cookbook covers installation of highly available MariaDB with Galera.

Configuring Ubuntu Cloud Archive for OpenStack

Posted on March 28, 2015

Ubuntu 14.04 LTS, the release used throughout this book, provides two repositories for installing OpenStack. The standard repository ships with the Icehouse release of OpenStack, whereas a further supported repository, called the Ubuntu Cloud Archive, provides access to the latest release at the time of writing, Juno. We will install and configure the OpenStack Identity service (as well as the rest of the OpenStack services) with packages from the Ubuntu Cloud Archive, which provides the Juno release.

Getting ready

Ensure you have a suitable server available for installation of the OpenStack Identity service components. If you are using the accompanying Vagrant environment as described in the Preface, this will be the controller node.

Ensure you are logged on to the controller node and that it has Internet access, so that we can install the packages required for running Keystone. If you created this node with Vagrant, you can execute the following command:

vagrant ssh controller

How to do it…

Carry out the following steps to configure Ubuntu 14.04 LTS to use the Ubuntu Cloud Archive:

- To access the Ubuntu Cloud Archive repository, we first install the Ubuntu Cloud Archive Keyring and enable Personal Package Archives within Ubuntu as follows:

sudo apt-get update
sudo apt-get install -y software-properties-common ubuntu-cloud-keyring

- Next we enable the Ubuntu Cloud Archive for OpenStack Juno. We do this as follows:

sudo add-apt-repository -y cloud-archive:juno
sudo apt-get update

How it works…

What we’re doing here is adding an extra repository to our system that provides a tested set of OpenStack packages, fully supported on the Ubuntu 14.04 LTS release. These are the packages that will be used when we install OpenStack on our system.
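To see what the extra repository amounts to, the sketch below prints an APT source entry for a given Cloud Archive release. It assumes, without confirming it here, that add-apt-repository writes an entry of the form `deb http://ubuntu-cloud.archive.canonical.com/ubuntu SERIES-updates/RELEASE main`. The helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: print the APT source entry for an Ubuntu Cloud Archive release.
# cloud_archive_line is hypothetical, and the URL and 'SERIES-updates/RELEASE'
# layout are an assumption about what add-apt-repository writes.
cloud_archive_line() {
  local release="$1" series="${2:-trusty}"
  printf 'deb http://ubuntu-cloud.archive.canonical.com/ubuntu %s-updates/%s main\n' \
    "$series" "$release"
}

cloud_archive_line juno
# To add it by hand instead of using add-apt-repository (as root):
#   cloud_archive_line juno > /etc/apt/sources.list.d/cloudarchive-juno.list
#   apt-get update
```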

There’s more…

More information about the Ubuntu Cloud Archive can be found by visiting the following address: https://wiki.ubuntu.com/ServerTeam/CloudArchive. This explains the release process and the ability to use the latest releases of OpenStack (new versions are released every 6 months) on a long-term supported release of Ubuntu, which is released every 2 years.

Using an alternative release

Deviating from the stable release is appropriate when you are helping to develop or debug OpenStack, or when you require functionality that is not available in the current release.

To use a particular release from the Ubuntu Cloud Archive, for example the next OpenStack release, Kilo, we issue the following command:

sudo add-apt-repository cloud-archive:kilo

Creating a Sandbox Environment for the OpenStack Cloud Computing Cookbook

Posted on October 31, 2014

Creating a sandbox environment using VirtualBox (or VMware Fusion) and Vagrant allows us to discover and experiment with the OpenStack services. VirtualBox gives us the ability to spin up virtual machines and networks without affecting the rest of our working environment, and is freely available at http://www.virtualbox.org for Windows, Mac OS X, and Linux. Vagrant allows us to automate this task, meaning we can spend less time creating our test environments and more time using OpenStack. This test environment can then be used for the rest of the OpenStack Cloud Computing Cookbook.

It is assumed that the computer you will use to run your test environment has enough processing power, hardware virtualization support (for example, Intel VT-x or AMD-V) and at least 8 GB RAM. Remember that we’re creating virtual machines that will themselves be used to spin up virtual machines, so the more RAM you have, the better.
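On a Linux host you can roughly check both requirements before you start. The sketch below counts /proc/cpuinfo entries that advertise the vmx (Intel VT-x) or svm (AMD-V) flag and reads MemTotal from /proc/meminfo. It does not cover Mac OS X or Windows hosts. The function names and the 7 GiB cut-off are assumptions made for this sketch:

```shell
#!/usr/bin/env bash
# Sketch: rough pre-flight check of a Linux host before building the sandbox.
# Linux-only (reads /proc). Function names and the 7 GiB cut-off are assumptions.

# Count CPU entries advertising Intel VT-x ("vmx") or AMD-V ("svm").
virt_cpu_count() {
  grep -Ewc 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Total RAM in kB as reported by the kernel.
mem_total_kb() {
  awk '/^MemTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Print a summary; return non-zero if either requirement looks unmet.
host_check() {
  local cpus mem_kb
  cpus=$(virt_cpu_count "${1:-}" 2>/dev/null)
  mem_kb=$(mem_total_kb "${2:-}" 2>/dev/null)
  cpus=${cpus:-0}
  mem_kb=${mem_kb:-0}
  echo "CPU entries with VT-x/AMD-V: ${cpus}"
  echo "RAM: $(( mem_kb / 1024 / 1024 )) GiB (rounded down)"
  # An "8 GB" machine usually reports a little under 8 GiB in MemTotal,
  # so this sketch accepts anything from 7 GiB upwards.
  [ "$cpus" -gt 0 ] && [ "$mem_kb" -ge $(( 7 * 1024 * 1024 )) ]
}

host_check || echo "This host may not be suitable for the sandbox." >&2
```

Virtualization extensions can also be disabled in the BIOS or firmware. In that case the flag may still appear even though KVM cannot use it, so treat a positive result as a hint rather than a guarantee.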

Getting ready

To begin with, we must download VirtualBox from http://www.virtualbox.org/ and then follow the installation procedure once this has been downloaded.

We also need to download and install Vagrant, which is covered later in this recipe.

The steps throughout the book assume the underlying operating system that will be used to install OpenStack on will be Ubuntu 14.04 LTS release.

We don’t need to download an Ubuntu 14.04 ISO, as our Vagrant environment does this for us.

How to do it…

To create our sandbox environment within VirtualBox, we will use Vagrant to define a number of virtual machines that allow us to run all of the OpenStack services used in the OpenStack Cloud Computing Cookbook:

controller = Controller services (APIs + Shared Services)

network = OpenStack Network node

compute = OpenStack Compute (Nova) for running KVM instances

swift = OpenStack Object Storage (All-In-One) installation

cinder = OpenStack Block Storage node

These virtual machines will be configured with an appropriate amount of RAM, CPU and disk, and will have a total of four network interfaces. Vagrant automatically sets up an interface on each virtual machine that will NAT (Network Address Translate) traffic out, allowing the virtual machine to connect to the network outside of VirtualBox to download packages. This NAT interface is not mentioned in our Vagrantfile but will be visible on our virtual machines as eth0. A Vagrantfile, which is found in the working directory of our virtual machine sandbox environment, is a simple file that describes our virtual machines and how VirtualBox will create them. We configure our first interface as the host network interface of our OpenStack virtual machines (the interface a client uses to connect to Horizon or the API). A second interface is for our private network, which OpenStack Compute uses for internal communication between different OpenStack Compute hosts, and a third will be used when we look at Neutron networking as an external provider network. When these virtual machines become available after starting them up, you will see the four interfaces explained below:

eth0 = VirtualBox NAT

eth1 = Host Network

eth2 = Private (or Tenant) Network (host-host communication for Neutron created networks)

eth3 = Neutron External Network (when creating an externally routed Neutron network)

Carry out the following steps to create a virtual machine with Vagrant that will be used to run the OpenStack services:

- Install VirtualBox from http://www.virtualbox.org/. The book was written using VirtualBox version 4.3.18.

- Install Vagrant from http://www.vagrantup.com/. The book was written using Vagrant version 1.6.5.

- Once installed, we can define our virtual machines and networking in a file called Vagrantfile. To do this, create a working directory (for example, “~/cookbook”) and edit a file in it called Vagrantfile, as shown in the following command snippet:

mkdir ~/cookbook
cd ~/cookbook
vim Vagrantfile

- We can now proceed to configure Vagrant by editing the ~/cookbook/Vagrantfile file with the following code:

# -*- mode: ruby -*-
# vi: set ft=ruby :

# We set the last octet in IPV4 address here
nodes = {
    'controller' => [1, 200],
    'network'    => [1, 202],
    'compute'    => [1, 201],
    'swift'      => [1, 210],
    'cinder'     => [1, 211],
}

Vagrant.configure("2") do |config|
  # Virtualbox
  config.vm.box = "trusty64"
  config.vm.box_url = "http://cloud-images.ubuntu.com/vagrant/trusty/current/trusty-server-cloudimg-amd64-vagrant-disk1.box"
  config.vm.synced_folder ".", "/vagrant", type: "nfs"

  # VMware Fusion / Workstation
  config.vm.provider "vmware_fusion" do |vmware, override|
    override.vm.box = "trusty64_fusion"
    override.vm.box_url = "https://oss-binaries.phusionpassenger.com/vagrant/boxes/latest/ubuntu-14.04-amd64-vmwarefusion.box"
    override.vm.synced_folder ".", "/vagrant", type: "nfs"

    # Fusion Performance Hacks
    vmware.vmx["logging"] = "FALSE"
    vmware.vmx["MemTrimRate"] = "0"
    vmware.vmx["MemAllowAutoScaleDown"] = "FALSE"
    vmware.vmx["mainMem.backing"] = "swap"
    vmware.vmx["sched.mem.pshare.enable"] = "FALSE"
    vmware.vmx["snapshot.disabled"] = "TRUE"
    vmware.vmx["isolation.tools.unity.disable"] = "TRUE"
    vmware.vmx["unity.allowCompostingInGuest"] = "FALSE"
    vmware.vmx["unity.enableLaunchMenu"] = "FALSE"
    vmware.vmx["unity.showBadges"] = "FALSE"
    vmware.vmx["unity.showBorders"] = "FALSE"
    vmware.vmx["unity.wasCapable"] = "FALSE"
  end

  # Default is 2200..something, but port 2200 is used by forescout NAC agent.
  config.vm.usable_port_range= 2800..2900

  nodes.each do |prefix, (count, ip_start)|
    count.times do |i|
      hostname = "%s" % [prefix, (i+1)]

      config.vm.define "#{hostname}" do |box|
        box.vm.hostname = "#{hostname}.book"
        box.vm.network :private_network, ip: "172.16.0.#{ip_start+i}", :netmask => "255.255.0.0"
        box.vm.network :private_network, ip: "172.10.0.#{ip_start+i}", :netmask => "255.255.0.0"
        box.vm.network :private_network, ip: "192.168.100.#{ip_start+i}", :netmask => "255.255.255.0"

        # If using Fusion
        box.vm.provider :vmware_fusion do |v|
          v.vmx["memsize"] = 1024
          if prefix == "compute" or prefix == "controller" or prefix == "swift"
            v.vmx["memsize"] = 2048
          end # if
        end # box.vm fusion

        # Otherwise using VirtualBox
        box.vm.provider :virtualbox do |vbox|
          # Defaults
          vbox.customize ["modifyvm", :id, "--memory", 1024]
          vbox.customize ["modifyvm", :id, "--cpus", 1]
          vbox.customize ["modifyvm", :id, "--nicpromisc3", "allow-all"]
          vbox.customize ["modifyvm", :id, "--nicpromisc4", "allow-all"]
          if prefix == "compute" or prefix == "controller" or prefix == "swift"
            vbox.customize ["modifyvm", :id, "--memory", 2048]
            vbox.customize ["modifyvm", :id, "--cpus", 2]
          end # if
        end # box.vm virtualbox
      end # config.vm.define
    end # count.times
  end # nodes.each
end # Vagrant.configure("2")

- We are now ready to power on our virtual machines. We do this by simply running the following command:

vagrant up

Congratulations! We have successfully created the VirtualBox virtual machines running Ubuntu 14.04, which are able to run OpenStack services.

How it works…

What we have done is define a number of virtual machines within VirtualBox or VMware Fusion using Vagrant. Vagrant then configures these virtual machines based on the settings given in the Vagrantfile, in the directory where we want to store and run our VirtualBox or VMware Fusion virtual machines. This file is based on Ruby syntax, but the lines are relatively self-explanatory. We have specified the following:

- The hostnames are controller, network, compute, swift and cinder, and each has a corresponding 4th octet that is appended to the networks given further into the file.

- The VM is based on Ubuntu Trusty Tahr, the codename for Ubuntu 14.04 LTS 64-bit.

- We configure some optimizations and specific configurations for VMware and VirtualBox.

- The file has been written as a series of nested loops, iterating over the “nodes” hash set at the top of the file.

- In each iteration, the corresponding virtual machine and its configuration are defined.
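For quick reference, the following sketch prints the addresses that the Vagrantfile assigns to each node. It mirrors the nodes hash, where count is 1 so the last octet is simply ip_start. It also assumes that the three private networks appear as eth1 to eth3 in the order they are declared, after the NAT interface on eth0. The helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: list the addresses the Vagrantfile gives each node.
# node_addresses is hypothetical; it assumes count = 1 for every node and that
# the private networks map to eth1..eth3 in declaration order.
node_addresses() {
  local node octet
  while read -r node octet; do
    printf '%-10s eth1=172.16.0.%s eth2=172.10.0.%s eth3=192.168.100.%s\n' \
      "$node" "$octet" "$octet" "$octet"
  done <<'EOF'
controller 200
network 202
compute 201
swift 210
cinder 211
EOF
}

node_addresses
```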

We then launch these virtual machines using Vagrant with the following simple command:

vagrant up

This will launch all VMs listed in the Vagrantfile.

To see the status of the virtual machines we use the following command:

vagrant status

To log into any of the machines we use the following command:

vagrant ssh controller

Replace “controller” with the name of the virtual machine you want to use.
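With five machines it can be handy to see at a glance which ones are not running. The sketch below filters Vagrant's machine-readable status output. It assumes the comma-separated `timestamp,target,type,data` layout with one `state` row per machine. The not_running helper is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: report sandbox nodes that are not running.
# not_running is hypothetical; it assumes the machine-readable
# "timestamp,target,type,data" layout with a "state" row per VM.
not_running() {
  awk -F, '$3 == "state" && $4 != "running" { print $2 }'
}

# Run from the directory containing the Vagrantfile.
if command -v vagrant >/dev/null 2>&1 && [ -f Vagrantfile ]; then
  vagrant status --machine-readable | not_running
fi
```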

Installing Rackspace Private Cloud using Chef Cookbooks

Posted on February 4, 2014

What It Does

In this recipe we show you how to install Rackspace Private Cloud on 3 servers: 2 Controllers in HA and a Compute host.

Getting Ready

You will need:

- a Chef server installed and configured

- 3 Servers (virtual or physical) running Ubuntu 12.04

Ensure you are on a client or server that has the Chef Client, knife, installed and configured to use your Chef Server.
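Because the script below runs with set -e and tries to bootstrap every address it is given, it may be worth validating the addresses before you start. This is a minimal sketch. The helpers valid_ipv4 and check_nodes are hypothetical and are not part of the script:

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the node addresses before running the deployment script.
# valid_ipv4 and check_nodes are hypothetical helpers, not part of the script.

# Succeeds if $1 is a dotted-quad IPv4 address with every octet in 0-255.
valid_ipv4() {
  local ip="$1" octet
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  [[ $ip =~ $re ]] || return 1
  local IFS=.
  for octet in $ip; do
    # 10# forces base 10 so octets such as "08" are not read as octal.
    (( 10#$octet <= 255 )) || return 1
  done
  return 0
}

# Checks every argument and reports each invalid one on stderr.
check_nodes() {
  local node bad=0
  for node in "$@"; do
    if ! valid_ipv4 "$node"; then
      echo "Not a valid IPv4 address: ${node}" >&2
      bad=1
    fi
  done
  return "$bad"
}

# Example values copied from the script. COMPUTE_NODES is deliberately left
# unquoted so it splits into one argument per address, as in the script's loops.
CONTROLLER1="${CONTROLLER1:-10.51.50.1}"
CONTROLLER2="${CONTROLLER2:-10.51.50.2}"
COMPUTE_NODES="${COMPUTE_NODES:-10.51.50.3 10.51.50.4}"
check_nodes "$CONTROLLER1" "$CONTROLLER2" $COMPUTE_NODES && echo "Node addresses look valid."
```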

How to do it…

#!/usr/bin/env bash
set -e
set -v
set -u

# This is a crude script which will deploy an OpenStack HA environment.
# YOU have to populate the IP addresses for Controller 1 and 2 as well as
# the IP addresses for your compute nodes. Additionally, you will need to
# populate the VIP_PREFIX with the first three octets of your VIP addresses.
# You should run this script on the node that will become controller 1.

# Rabbit Password
RMQ_PW="Passw0rd"

# Rabbit IP address, this should be the host IP which is on
# the same network used by your management network
RMQ_IP="10.51.50.1"

# Set the cookbook version that we will upload to chef
COOKBOOK_VERSION="v4.2.1"

# SET THE NODE IP ADDRESSES
CONTROLLER1="10.51.50.1"
CONTROLLER2="10.51.50.2"

# ADD ALL OF THE COMPUTE NODE IP ADDRESSES, SPACE SEPARATED.
COMPUTE_NODES="10.51.50.3 10.51.50.4"

# This is the VIP prefix, i.e. the beginning of your IP addresses for all your VIPs.
# Note, this makes a lot of assumptions for your VIPs.
# The environment uses .154, .155, .156 for your HA VIPs.
VIP_PREFIX="10.51.50"

# Make the system key used for bootstrapping self and others.
if [ ! -f "/root/.ssh/id_rsa" ];then
  ssh-keygen -t rsa -f /root/.ssh/id_rsa -N ''
  pushd /root/.ssh/
  cat id_rsa.pub | tee -a authorized_keys
  popd
fi

for node in ${CONTROLLER1} ${CONTROLLER2} ${COMPUTE_NODES};do
  ssh-copy-id ${node}
done

apt-get update
apt-get install -y python-dev python-pip git erlang erlang-nox erlang-dev curl lvm2
pip install git+https://github.com/cloudnull/mungerator

RABBIT_URL="http://www.rabbitmq.com"

function rabbit_setup() {
  if [ ! "$(rabbitmqctl list_vhosts | grep -w '/chef')" ];then
    rabbitmqctl add_vhost /chef
  fi
  if [ "$(rabbitmqctl list_users | grep -w 'chef')" ];then
    rabbitmqctl delete_user chef
  fi
  rabbitmqctl add_user chef "${RMQ_PW}"
  rabbitmqctl set_permissions -p /chef chef '.*' '.*' '.*'
}

function install_apt_packages() {
  RABBITMQ_KEY="${RABBIT_URL}/rabbitmq-signing-key-public.asc"
  wget -O /tmp/rabbitmq.asc ${RABBITMQ_KEY};
  apt-key add /tmp/rabbitmq.asc
  RABBITMQ="${RABBIT_URL}/releases/rabbitmq-server/v3.1.5/rabbitmq-server_3.1.5-1_all.deb"
  wget -O /tmp/rabbitmq.deb ${RABBITMQ}
  dpkg -i /tmp/rabbitmq.deb
  rabbit_setup

  CHEF="https://www.opscode.com/chef/download-server?p=ubuntu&pv=12.04&m=x86_64"
  CHEF_SERVER_PACKAGE_URL=${CHEF}
  wget -O /tmp/chef_server.deb ${CHEF_SERVER_PACKAGE_URL}
  dpkg -i /tmp/chef_server.deb
}

function CREATE_SWAP() {
  cat > /tmp/swap.sh <<EOF
#!/usr/bin/env bash
if [ ! "\$(swapon -s | grep -v Filename)" ];then
  SWAPFILE="/SwapFile"
  if [ -f "\${SWAPFILE}" ];then
    swapoff -a
    rm \${SWAPFILE}
  fi
  dd if=/dev/zero of=\${SWAPFILE} bs=1M count=1024
  chmod 600 \${SWAPFILE}
  mkswap \${SWAPFILE}
  swapon \${SWAPFILE}
fi
EOF

  cat > /tmp/swappiness.sh <<EOF
#!/usr/bin/env bash
SWAPPINESS=\$(sysctl -a | grep vm.swappiness | awk -F' = ' '{print \$2}')
if [ "\${SWAPPINESS}" != 60 ];then
  sysctl vm.swappiness=60
fi
EOF

  if [ ! "$(swapon -s | grep -v Filename)" ];then
    chmod +x /tmp/swap.sh
    chmod +x /tmp/swappiness.sh
    /tmp/swap.sh && /tmp/swappiness.sh
  fi
}

CREATE_SWAP

install_apt_packages

mkdir -p /etc/chef-server
cat > /etc/chef-server/chef-server.rb <<EOF
erchef["s3_url_ttl"] = 3600
nginx["ssl_port"] = 4000
nginx["non_ssl_port"] = 4080
nginx["enable_non_ssl"] = true
rabbitmq["enable"] = false
rabbitmq["password"] = "${RMQ_PW}"
rabbitmq["vip"] = "${RMQ_IP}"
rabbitmq['node_ip_address'] = "${RMQ_IP}"
chef_server_webui["web_ui_admin_default_password"] = "THISisAdefaultPASSWORD"
bookshelf["url"] = "https://#{node['ipaddress']}:4000"
EOF

chef-server-ctl reconfigure

sysctl net.ipv4.conf.default.rp_filter=0 | tee -a /etc/sysctl.conf
sysctl net.ipv4.conf.all.rp_filter=0 | tee -a /etc/sysctl.conf
sysctl net.ipv4.ip_forward=1 | tee -a /etc/sysctl.conf

bash <(wget -O - http://opscode.com/chef/install.sh)

SYS_IP=$(ohai ipaddress | awk '/^ / {gsub(/ *\"/, ""); print; exit}')
export CHEF_SERVER_URL=https://${SYS_IP}:4000

# Configure Knife
mkdir -p /root/.chef
cat > /root/.chef/knife.rb <<EOF
log_level :info
log_location STDOUT
node_name 'admin'
client_key '/etc/chef-server/admin.pem'
validation_client_name 'chef-validator'
validation_key '/etc/chef-server/chef-validator.pem'
chef_server_url "https://${SYS_IP}:4000"
cache_options( :path => '/root/.chef/checksums' )
cookbook_path [ '/opt/chef-cookbooks/cookbooks' ]
EOF

if [ ! -d "/opt/" ];then
  mkdir -p /opt/
fi

if [ -d "/opt/chef-cookbooks" ];then
  rm -rf /opt/chef-cookbooks
fi

git clone https://github.com/rcbops/chef-cookbooks.git /opt/chef-cookbooks
pushd /opt/chef-cookbooks
git checkout ${COOKBOOK_VERSION}
git submodule init
git submodule sync
git submodule update

# Get add-on Cookbooks
knife cookbook site download -f /tmp/cron.tar.gz cron 1.2.6
tar xf /tmp/cron.tar.gz -C /opt/chef-cookbooks/cookbooks
knife cookbook site download -f /tmp/chef-client.tar.gz chef-client 3.0.6
tar xf /tmp/chef-client.tar.gz -C /opt/chef-cookbooks/cookbooks

# Upload all of the RCBOPS Cookbooks
knife cookbook upload -o /opt/chef-cookbooks/cookbooks -a
popd

# Save the erlang cookie
if [ ! -f "/var/lib/rabbitmq/.erlang.cookie" ];then
  ERLANG_COOKIE="ANYSTRINGWILLDOJUSTFINE"
else
  ERLANG_COOKIE="$(cat /var/lib/rabbitmq/.erlang.cookie)"
fi

# DROP THE BASE ENVIRONMENT FILE
cat > /opt/base.env.json <<EOF
{
  "name": "RCBOPS_Openstack_Environment",
  "description": "Environment for Openstack Private Cloud",
  "cookbook_versions": {
  },
  "json_class": "Chef::Environment",
  "chef_type": "environment",
  "default_attributes": {
  },
  "override_attributes": {
    "monitoring": {
      "procmon_provider": "monit",
      "metric_provider": "collectd"
    },
    "enable_monit": true,
    "osops_networks": {
      "management": "${VIP_PREFIX}.0/24",
      "swift": "${VIP_PREFIX}.0/24",
      "public": "${VIP_PREFIX}.0/24",
      "nova": "${VIP_PREFIX}.0/24"
    },
    "rabbitmq": {
      "cluster": true,
      "erlang_cookie": "${ERLANG_COOKIE}"
    },
    "nova": {
      "config": {
        "use_single_default_gateway": false,
        "ram_allocation_ratio": 1.0,
        "disk_allocation_ratio": 1.0,
        "cpu_allocation_ratio": 2.0,
        "resume_guests_state_on_host_boot": false
      },
      "network": {
        "provider": "neutron"
      },
      "scheduler": {
        "default_filters": [
          "AvailabilityZoneFilter",
          "ComputeFilter",
          "RetryFilter"
        ]
      },
      "libvirt": {
        "vncserver_listen": "0.0.0.0",
        "virt_type": "qemu"
      }
    },
    "keystone": {
      "pki": {
        "enabled": false
      },
      "admin_user": "admin",
      "tenants": [
        "service",
        "admin",
        "demo",
        "demo2"
      ],
      "users": {
        "admin": {
          "password": "secrete",
          "roles": {
            "admin": [
              "admin"
            ]
          }
        },
        "demo": {
          "password": "secrete",
          "default_tenant": "demo",
          "roles": {
            "Member": [
              "demo2",
              "demo"
            ]
          }
        },
        "demo2": {
          "password": "secrete",
          "default_tenant": "demo2",
          "roles": {
            "Member": [
              "demo2",
              "demo"
            ]
          }
        }
      }
    },
    "neutron": {
      "ovs": {
        "network_type": "gre",
        "provider_networks": [
          {
            "bridge": "br-eth2",
            "vlans": "1024:1024",
            "label": "ph-eth2"
          }
        ]
      }
    },
    "mysql": {
      "tunable": {
        "log_queries_not_using_index": false
      },
      "allow_remote_root": true,
      "root_network_acl": "127.0.0.1"
    },
    "vips": {
      "horizon-dash": "${VIP_PREFIX}.156",
      "keystone-service-api": "${VIP_PREFIX}.156",
      "nova-xvpvnc-proxy": "${VIP_PREFIX}.156",
      "nova-api": "${VIP_PREFIX}.156",
      "cinder-api": "${VIP_PREFIX}.156",
      "nova-ec2-public": "${VIP_PREFIX}.156",
      "config": {
        "${VIP_PREFIX}.156": {
          "vrid": 12,
          "network": "public"
        },
        "${VIP_PREFIX}.154": {
          "vrid": 10,
          "network": "public"
        },
        "${VIP_PREFIX}.155": {
          "vrid": 11,
          "network": "public"
        }
      },
      "rabbitmq-queue": "${VIP_PREFIX}.155",
      "nova-novnc-proxy": "${VIP_PREFIX}.156",
      "mysql-db": "${VIP_PREFIX}.154",
      "glance-api": "${VIP_PREFIX}.156",
      "keystone-internal-api": "${VIP_PREFIX}.156",
      "horizon-dash_ssl": "${VIP_PREFIX}.156",
      "glance-registry": "${VIP_PREFIX}.156",
      "neutron-api": "${VIP_PREFIX}.156",
      "ceilometer-api": "${VIP_PREFIX}.156",
      "ceilometer-central-agent": "${VIP_PREFIX}.156",
      "heat-api": "${VIP_PREFIX}.156",
      "heat-api-cfn": "${VIP_PREFIX}.156",
      "heat-api-cloudwatch": "${VIP_PREFIX}.156",
      "keystone-admin-api": "${VIP_PREFIX}.156"
    },
    "glance": {
      "images": [
      ],
      "image": {
      },
      "image_upload": false
    },
    "osops": {
      "do_package_upgrades": false,
      "apply_patches": false
    },
    "developer_mode": false
  }
}
EOF

# Upload all of the RCBOPS Roles
knife role from file /opt/chef-cookbooks/roles/*.rb
knife environment from file /opt/base.env.json

# Build all the things (run lists are quoted so the shell does not treat [...] as a glob)
knife bootstrap -E RCBOPS_Openstack_Environment -r 'role[ha-controller1],role[single-network-node]' ${CONTROLLER1}
knife bootstrap -E RCBOPS_Openstack_Environment -r 'role[ha-controller2],role[single-network-node]' ${CONTROLLER2}
for node in ${COMPUTE_NODES};do
  knife bootstrap -E RCBOPS_Openstack_Environment -r 'role[single-compute]' ${node}
done
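The script falls back to a fixed Erlang cookie when none exists yet. The cookie acts as the shared secret between Erlang nodes, so a well-known value is a weak choice. The sketch below generates a random one with a hypothetical new_erlang_cookie helper. Every RabbitMQ cluster member must share the same cookie. In this script each node receives the value through the rabbitmq erlang_cookie attribute in the environment file, so generating it once is sufficient:

```shell
#!/usr/bin/env bash
# Sketch: generate a random Erlang cookie instead of the script's fixed
# fallback string. new_erlang_cookie is a hypothetical helper.
new_erlang_cookie() {
  # 20 random bytes printed as 40 lowercase hex characters.
  head -c 20 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# In the script, this would replace the fallback assignment, e.g.:
#   ERLANG_COOKIE="$(new_erlang_cookie)"
new_erlang_cookie
echo
```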